Power BI was released in 2015. Since then, it has spread like a bush fire. It has become a critical tool for many businesses, taking data from anywhere and combining it to power real-time decisions. Since its release, customers have created over 11.5 million data models worldwide, with 30,000 more added daily.

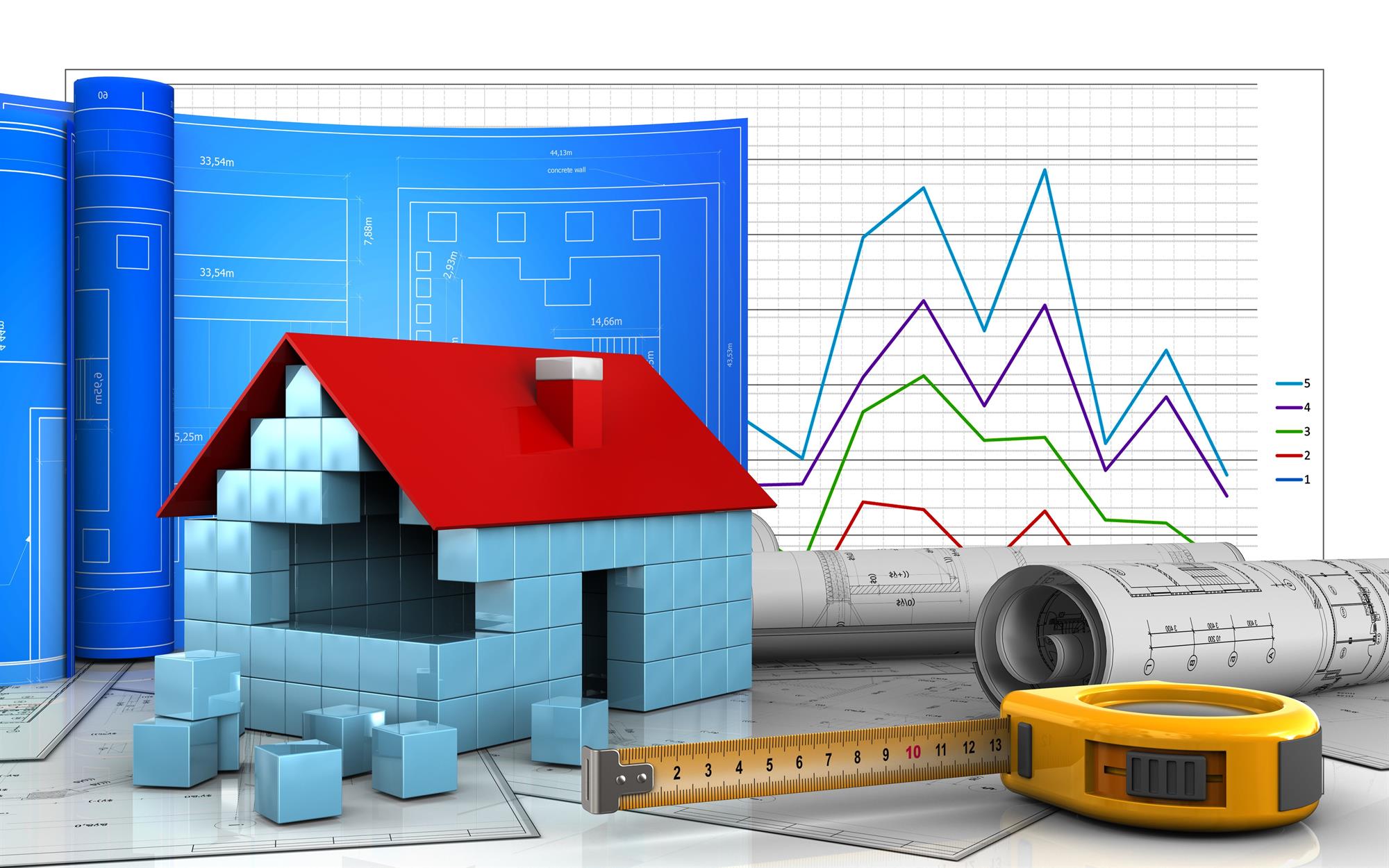

Building data models with Power BI is easy and fast. You’re not required to flatten your data into one table. Once you’ve pulled in data from multiple sources, you can create a model by simply connecting tables using relationships. However, with great flexibility comes great responsibility. It is very unlikely that the source data is already perfectly structured for the kind of analysis you want to perform. If you only connect source tables with relationships, without following data modelling principles, too often this will result in complex data models that are expensive to maintain. If the numbers you’re calculating do not match, or if the DAX formulas are too complex to maintain, you will most likely have an issue in a data model. A data model is like the foundation for your house, get it right and the subsequent construction, and the house itself, will be better.

A data model is like the foundation for your house, get it right and the subsequent construction, and the house itself, will be better.

Data modelling is a basic skill that anybody interesting in building BI reports should master. Being a good data modeler means being able to match your specific model with one of the many different patterns that have already been studied and solved. A good introduction to this topic is the book: Analyzing Data with Power BI and Power Pivot for Excel, written by M. Russo and A. Ferrari. This book will get you started, however, to become a great data modeler you will need experience, which means you might have to suffer some failures as you learn.

The first important data modelling decision is granularity, i.e. the level of detail in your tables. You must have the right level of granularity to meet your reporting needs. If your granularity is too coarse you will not be able to extract the information you want. On the other hand, fine granularity means larger and slower datasets, and potentially complex DAX formulas. For example, if your Sales table contains data for the individual transactions but you only need to report by month, quarter and year, the correct level (or granularity) would be a month.

Another critical data modelling decision is the level of normalisation. The most common reporting data sources are operational systems, such as Dynamics NAV. An operational system directly supports the execution of a business process by capturing details about significant business events or transactions. Therefore, operational databases are highly normalised and optimised for insert and update operations. While the focus of the operational system is the execution of a business process, the BI system, such as Power BI, supports the evaluation of the process. Interaction with a BI system takes place exclusively through queries that only retrieve data about business processes. When you only read data, normalisation is rarely a good technique. The database performance is better when there are fewer joins required.

Dimensional modelling has been introduced as a direct response to the unique requirements of BI systems. A star schema is the simplest form of a dimensional model, in which data is organized into facts and dimensions.

In my next blog I will introduce star schemas and their benefits.